Ray tracing, the new graphics revolution?

Nvidia is calling it the biggest graphics breakthrough since the introduction of the CUDA architecture: ray tracing. The new RTX graphics cards from the inventor of the graphics processor should make real-time ray tracing accessible to the average user. What's behind this technology, and what does it mean for gamers?

Just after launching its new series of professional graphics cards based on the Turing architecture, Nvidia is presenting its new consumer cards. The brand is dropping the GTX designation in favour of RTX for the 2070, 2080 and 2080 Ti models. The Turing architecture is meant to finally make real-time ray tracing possible, something that was previously too computationally intensive. By bringing the new ray tracing (RT) cores and the CUDA cores together on a single card, the RTX GPUs combine ray tracing and rasterisation.

Ray tracing enables realistic lighting effects and should make games look even more lifelike. Microsoft has developed a new API, DirectX Raytracing (DXR), and Epic Games will be making real-time ray tracing available to Unreal Engine developers this year. Everything seems to be leading us towards a new graphics revolution.

Where does ray tracing come from?

Ray tracing is anything but new. John Turner Whitted had already described the basic algorithm in 1979. It then took a long time before images rendered with ray tracing could actually be produced, as the technology requires enormous computing power. Ray tracing was nevertheless used for certain sequences in "Shrek". You may have first noticed it in "Cars" in 2006. But even there, the technology was used sparingly, as rendering a single image took an enormous amount of time. Nvidia launched ray tracing for games in 2008, and it took another ten years for the first graphics cards supporting it in real time to reach the market. Games such as "Shadow of the Tomb Raider", "Battlefield V", "We Happy Few", "Hitman 2", "Final Fantasy XV", "PlayerUnknown's Battlegrounds", "Remnant: From the Ashes" and "Dauntless" are set to support ray tracing and look very promising.

What distinguishes ray tracing from rasterisation?

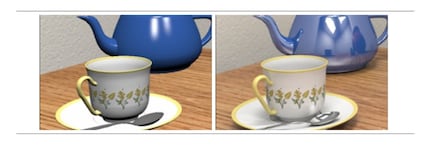

Ray tracing stands out for its highly realistic modelling of a physical environment, but it requires more computing power. Rasterisation, on the other hand, is faster and can therefore be combined with many other algorithms. However, rasterised images are nowhere near as impressive as ray-traced ones.

Source: screenshot from "Raytracing in Industry" by Hugo Pacheco

Rasterisation (polygon rasterisation)

A 3D scene is made up of several elements: 3D models built from triangles (polygons) covered in textures and colours, the light that illuminates the objects, and the viewpoint from which the scene is observed. The raster image itself is a grid of pixels seen from that viewpoint. Rasterisation determines which pixels are covered by each polygon in the image, and the colour of the polygon is then applied to those pixels. The 3D engine starts with the most distant polygons and works its way towards the camera. Where one polygon overlaps another, the pixel first takes on the colour of the polygon in the background and is then overwritten with the colour of the polygon in front.
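
For readers who like to tinker, here is a minimal Python sketch of that back-to-front process (often called the painter's algorithm). It assumes the triangles have already been projected onto the screen and that each one has a single depth value; `Triangle`, `covers` and `rasterise` are purely illustrative names. Real GPUs usually resolve visibility per pixel with a depth buffer (z-buffer) rather than by sorting whole polygons.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class Triangle:
    a: Point
    b: Point
    c: Point
    depth: float  # distance from the camera (assumed constant per triangle)
    colour: str   # one character stands in for a colour


def edge(p: Point, q: Point, r: Point) -> float:
    """Signed area: positive if r lies to the left of the edge p -> q."""
    return (q[0] - p[0]) * (r[1] - p[1]) - (q[1] - p[1]) * (r[0] - p[0])


def covers(tri: Triangle, x: int, y: int) -> bool:
    """True if the centre of pixel (x, y) lies inside the triangle."""
    p = (x + 0.5, y + 0.5)
    w0, w1, w2 = edge(tri.b, tri.c, p), edge(tri.c, tri.a, p), edge(tri.a, tri.b, p)
    # Inside when all three edge tests agree in sign (works for either winding order).
    return (w0 >= 0 and w1 >= 0 and w2 >= 0) or (w0 <= 0 and w1 <= 0 and w2 <= 0)


def rasterise(triangles: List[Triangle], width: int, height: int,
              background: str = ".") -> List[str]:
    image = [[background] * width for _ in range(height)]
    # Draw the most distant triangles first; nearer ones overwrite them.
    for tri in sorted(triangles, key=lambda t: t.depth, reverse=True):
        for y in range(height):
            for x in range(width):
                if covers(tri, x, y):
                    image[y][x] = tri.colour
    return ["".join(row) for row in image]


far = Triangle((0, 0), (8, 0), (0, 8), depth=10.0, colour="F")
near = Triangle((2, 2), (8, 2), (2, 8), depth=1.0, colour="N")
for row in rasterise([near, far], 8, 8):
    print(row)
```

Even though `near` is passed in first, sorting by depth means it ends up on top wherever the two triangles overlap.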

Other algorithms such as shaders or shadow mapping are then added to the process to make the colour of the pixels more realistic.

Rasterisation has its limits: an object placed outside the field of view, for example, is ignored, even though it could cast a shadow on the visible scene or appear in a reflection. Some of these effects can be reproduced with additional algorithms such as shadow mapping, but the scenes will never look like the real thing.

That's because rasterisation doesn't work like our eye. By contrast, ray tracing is inspired by nature.

Ray tracing

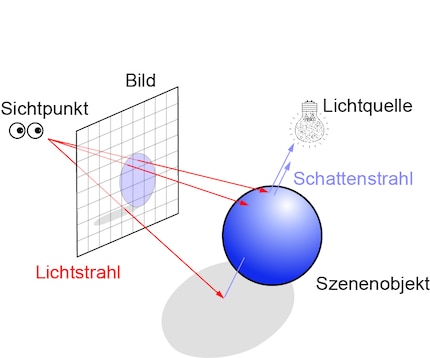

Ray tracing simulates the path of light to our eye in reverse. When we observe a scene, our eye receives rays from the light source that have been reflected by the various objects around us.

Ray tracing sends a ray from the point of view (the camera, i.e. the observer of the two-dimensional image) through each pixel. The ray extends along a half-line until it hits the first three-dimensional object in the scene to be rendered. This point of impact is used to determine the colour of the pixel.
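
The same idea fits in a few dozen lines of Python. This is a toy under stated assumptions, not how RTX hardware works: the camera sits at the origin looking down the negative z axis, the image plane is at z = -1, the scene contains only spheres, and the names `primary_ray`, `intersect` and `render` are hypothetical. Each pixel's ray is tested against every object, and only the closest point of impact decides the pixel's colour.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec = Tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalise(a: Vec) -> Vec:
    length = math.sqrt(dot(a, a))
    return (a[0] / length, a[1] / length, a[2] / length)


@dataclass
class Sphere:
    centre: Vec
    radius: float
    colour: str


def intersect(origin: Vec, direction: Vec, s: Sphere) -> Optional[float]:
    """Distance along a unit-length ray (a half-line) to the sphere, or None."""
    oc = sub(origin, s.centre)
    b = dot(oc, direction)
    disc = b * b - (dot(oc, oc) - s.radius * s.radius)
    if disc < 0:
        return None                      # the ray misses the sphere
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):     # nearer intersection first
        if t > 1e-6:                     # only points in front of the origin count
            return t
    return None


def primary_ray(x: int, y: int, width: int, height: int) -> Vec:
    """Direction from the eye at the origin through the centre of pixel (x, y)."""
    u = (2 * (x + 0.5) / width - 1) * (width / height)
    v = 1 - 2 * (y + 0.5) / height
    return normalise((u, v, -1.0))       # image plane at z = -1, camera looks down -z


def render(spheres: List[Sphere], width: int, height: int,
           background: str = ".") -> List[str]:
    eye: Vec = (0.0, 0.0, 0.0)
    rows = []
    for y in range(height):
        row = ""
        for x in range(width):
            d = primary_ray(x, y, width, height)
            nearest_t, colour = math.inf, background
            for s in spheres:
                t = intersect(eye, d, s)
                if t is not None and t < nearest_t:  # keep the first object hit
                    nearest_t, colour = t, s.colour
            row += colour
        rows.append(row)
    return rows


scene = [Sphere((0.0, 0.0, -5.0), 1.0, "A"), Sphere((0.0, 0.0, -3.0), 0.5, "B")]
print("\n".join(render(scene, 9, 9)))
```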

In fact, ray tracing works like light reflection in reverse: the rays start from the point of view and travel towards the light source. Incidentally, John Turner Whitted's ray tracing algorithm is based on the principle of the reversibility of light: a ray of light follows the same path whichever direction it travels in. Tracing rays forward from the light sources instead would not be suitable for rendering images, as most of those rays never reach the eye and the calculations would be wasted.

Determining the colour of a pixel is not enough, however, to obtain a realistic rendering; the illumination of the pixel must also be determined. This is the role of the secondary rays, which are cast once the primary rays have determined which objects in the scene are visible. To calculate the lighting, secondary rays are emitted from each point of impact towards the different light sources. If a ray is blocked by an object, the point lies in the shadow cast by that light source. The sum of all the secondary rays that reach a light source determines the amount of light falling on that point in the scene.
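
Here is a minimal Python sketch of these shadow rays for a single surface point. It rests on a few assumptions of its own: spheres are the only objects that can block light, lights are points with a scalar intensity, and each unblocked light is weighted by Lambert's cosine law, which is one common shading choice rather than something the article specifies. `direct_light` and the other names are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec = Tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass
class Sphere:
    centre: Vec
    radius: float


@dataclass
class Light:
    position: Vec
    intensity: float


def hit_distance(origin: Vec, direction: Vec, s: Sphere) -> Optional[float]:
    """Distance along a unit-length ray to the sphere, or None if it misses."""
    oc = sub(origin, s.centre)
    b = dot(oc, direction)
    disc = b * b - (dot(oc, oc) - s.radius ** 2)
    if disc < 0:
        return None
    for t in (-b - math.sqrt(disc), -b + math.sqrt(disc)):
        if t > 1e-6:
            return t
    return None


def direct_light(point: Vec, normal: Vec, lights: List[Light],
                 occluders: List[Sphere]) -> float:
    """Sum the contributions of every light whose shadow ray is not blocked."""
    total = 0.0
    for light in lights:
        to_light = sub(light.position, point)
        distance = math.sqrt(dot(to_light, to_light))
        direction = (to_light[0] / distance, to_light[1] / distance, to_light[2] / distance)
        # Shadow ray: blocked if any object is hit before the light is reached.
        blocked = False
        for s in occluders:
            t = hit_distance(point, direction, s)
            if t is not None and t < distance:
                blocked = True
                break
        if not blocked:
            # Assumed Lambert (cosine) shading for each light that gets through.
            total += light.intensity * max(0.0, dot(normal, direction))
    return total
```

A sphere placed between the point and the light drops that light's contribution to zero; the same sphere placed beyond the light changes nothing, because the shadow ray only checks the stretch up to the light.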

And that's not all. For an even more realistic rendering, reflection and refraction must also be taken into account: how much light is reflected at the point of impact and how much penetrates the material. Again, additional rays are sent out to determine the final colour of the pixel.
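
The directions of these extra rays follow from two textbook formulas: the law of reflection and Snell's law of refraction. The Python sketch below assumes unit-length vectors and a surface normal pointing back towards the incoming ray. To split the light between the reflected and the refracted ray it uses Schlick's approximation of the Fresnel equations, a common shortcut in renderers and not something the article itself prescribes. The function names are hypothetical.

```python
import math
from typing import Optional, Tuple

Vec = Tuple[float, float, float]


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def scale(a: Vec, k: float) -> Vec:
    return (a[0] * k, a[1] * k, a[2] * k)


def reflect(d: Vec, n: Vec) -> Vec:
    """Mirror direction r = d - 2 (d . n) n."""
    return add(d, scale(n, -2.0 * dot(d, n)))


def refract(d: Vec, n: Vec, n1: float, n2: float) -> Optional[Vec]:
    """Transmitted direction from Snell's law, or None on total internal reflection.

    n1 is the refractive index on the incoming side, n2 on the far side.
    """
    eta = n1 / n2
    cos_i = -dot(d, n)
    k = 1.0 - eta * eta * (1.0 - cos_i * cos_i)
    if k < 0.0:
        return None
    return add(scale(d, eta), scale(n, eta * cos_i - math.sqrt(k)))


def reflectance(d: Vec, n: Vec, n1: float, n2: float) -> float:
    """Fraction of light reflected (Schlick's approximation); the rest is transmitted."""
    cos_theta = -dot(d, n)
    if n1 > n2:
        # Leaving a denser medium: use the angle of the transmitted ray instead.
        eta = n1 / n2
        k = 1.0 - eta * eta * (1.0 - cos_theta * cos_theta)
        if k < 0.0:
            return 1.0  # total internal reflection: nothing is transmitted
        cos_theta = math.sqrt(k)
    r0 = ((n1 - n2) / (n1 + n2)) ** 2
    return r0 + (1.0 - r0) * (1.0 - cos_theta) ** 5
```

For light hitting glass (refractive index about 1.5) head-on, `reflectance` gives roughly 0.04: about 4 % of the light is reflected and the rest passes into the material.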

In summary, ray tracing uses several types of rays to render an image. The primary rays determine which objects are visible. The secondary rays (shadow, reflection and refraction rays) determine how those objects are lit.
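
Putting these pieces together gives the classic recursive structure often called Whitted-style ray tracing. The Python sketch below is deliberately tiny and makes simplifying assumptions of its own: spheres only, a single point light, grey-scale brightness, fixed per-material weights for the reflection and refraction rays instead of a physically based split, and a black background. `trace`, `nearest_hit` and the `Sphere` fields are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec = Tuple[float, float, float]


def add(a: Vec, b: Vec) -> Vec: return (a[0] + b[0], a[1] + b[1], a[2] + b[2])
def sub(a: Vec, b: Vec) -> Vec: return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
def scale(a: Vec, k: float) -> Vec: return (a[0] * k, a[1] * k, a[2] * k)
def dot(a: Vec, b: Vec) -> float: return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
def normalise(a: Vec) -> Vec: return scale(a, 1.0 / math.sqrt(dot(a, a)))


@dataclass
class Sphere:
    centre: Vec
    radius: float
    albedo: float          # share of light scattered diffusely
    reflect: float = 0.0   # weight given to the reflection ray
    transmit: float = 0.0  # weight given to the refraction ray
    ior: float = 1.5       # refractive index inside the sphere


def nearest_hit(o: Vec, d: Vec, spheres: List[Sphere]) -> Optional[Tuple[float, Sphere]]:
    best: Optional[Tuple[float, Sphere]] = None
    for s in spheres:
        oc = sub(o, s.centre)
        b = dot(oc, d)
        disc = b * b - (dot(oc, oc) - s.radius ** 2)
        if disc < 0:
            continue
        for t in (-b - math.sqrt(disc), -b + math.sqrt(disc)):
            if t > 1e-6:
                if best is None or t < best[0]:
                    best = (t, s)
                break
    return best


def trace(o: Vec, d: Vec, spheres: List[Sphere], light: Vec,
          depth: int = 0, max_depth: int = 4) -> float:
    if depth > max_depth:
        return 0.0                               # stop the recursion
    hit = nearest_hit(o, d, spheres)             # visibility (primary or secondary ray)
    if hit is None:
        return 0.0                               # background is black
    t, s = hit
    p = add(o, scale(d, t))
    n = normalise(sub(p, s.centre))
    inside = dot(d, n) > 0
    if inside:
        n = scale(n, -1.0)                       # normal must face the incoming ray
    # Shadow ray: is the light visible from p?
    to_light = sub(light, p)
    light_dir = normalise(to_light)
    blocker = nearest_hit(p, light_dir, spheres)
    lit = blocker is None or blocker[0] > math.sqrt(dot(to_light, to_light))
    colour = s.albedo * max(0.0, dot(n, light_dir)) if lit else 0.0
    # Reflection ray.
    if s.reflect > 0:
        r = sub(d, scale(n, 2.0 * dot(d, n)))
        colour += s.reflect * trace(p, r, spheres, light, depth + 1, max_depth)
    # Refraction ray (Snell's law); skipped on total internal reflection.
    if s.transmit > 0:
        eta = s.ior if inside else 1.0 / s.ior
        cos_i = -dot(d, n)
        k = 1.0 - eta * eta * (1.0 - cos_i * cos_i)
        if k >= 0:
            refr = add(scale(d, eta), scale(n, eta * cos_i - math.sqrt(k)))
            colour += s.transmit * trace(p, refr, spheres, light, depth + 1, max_depth)
    return colour


eye = (0.0, 0.0, 0.0)
mirror = Sphere((0.0, 0.0, -5.0), 1.0, albedo=0.0, reflect=1.0)
behind = Sphere((0.0, 0.0, 5.0), 1.0, albedo=1.0)
print(trace(eye, (0.0, 0.0, -1.0), [mirror, behind], light=eye))  # prints 1.0
```

Here the mirror sphere has no colour of its own (`albedo=0.0`), so all the brightness along this ray arrives via the reflection ray, which bounces back to the diffuse sphere behind the camera. A full renderer would call `trace` once per pixel and work in RGB, but the recursion stays the same.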

If all this was a bit too theoretical for you, I recommend this tutorial. You'll first learn the theory from the video, then put it all into practice.

The benefits of ray tracing

As mentioned earlier, rasterisation can achieve relatively realistic lighting effects. But ray tracing renders reflections accurately without resorting to complex additional algorithms: everything is calculated directly by the rendering algorithm. The same applies to inter-reflections, such as a rear-view mirror reflected in the bodywork of a car, an effect that is very difficult to reproduce with rasterisation.

Transparency effects are another advantage of ray tracing. Calculating transparency correctly is particularly complex with rasterisation, since the result depends on the rendering order. To get good results, transparent polygons would need to be sorted from furthest to closest to the camera before rendering.
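
A few lines of Python show why the order matters. The sketch assumes a single pixel covered by several transparent polygons, each reduced to a distance, a grey level and an opacity, blended with the common "over" operator; the function names are hypothetical. Blending the same two layers in a different order gives a different pixel colour.

```python
from typing import List, Tuple

Layer = Tuple[float, float, float]  # (distance from camera, grey level, opacity alpha)


def blend_over(dst: float, colour: float, alpha: float) -> float:
    """Standard 'over' blending of a translucent colour onto what is already there."""
    return alpha * colour + (1.0 - alpha) * dst


def shade_pixel(background: float, layers: List[Layer],
                sort_back_to_front: bool = True) -> float:
    if sort_back_to_front:
        # Furthest layer first, the ordering the text describes.
        layers = sorted(layers, key=lambda layer: layer[0], reverse=True)
    result = background
    for _distance, colour, alpha in layers:
        result = blend_over(result, colour, alpha)
    return result


# A half-transparent white pane in front of a half-transparent black pane,
# listed nearest-first, as a scene might happen to store them.
panes = [(1.0, 1.0, 0.5), (5.0, 0.0, 0.5)]
print(shade_pixel(0.0, panes))                            # 0.5
print(shade_pixel(0.0, panes, sort_back_to_front=False))  # 0.25
```

The sort key here is one distance per polygon. When polygons intersect or overlap one another cyclically, no single polygon order is correct for every pixel.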

In practice, this process requires too much computing power, and errors are unavoidable, as the sorting is done per polygon rather than per pixel. Ray tracing, on the other hand, makes it possible to render beautiful transparency effects.

Shadows must also be taken into account. In rasterisation, it is shadow mapping, among other techniques, that renders them in the image. But this requires a lot of memory, and aliasing is a problem. Ray tracing avoids these problems without needing an additional algorithm.
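
To make the memory and aliasing trade-off concrete, here is a deliberately one-dimensional Python sketch of shadow mapping. Assumptions: a directional light shining straight down, a 2D scene made of horizontal segments, and a shadow map with `resolution` depth values spread across the scene's width. Real shadow maps are 2D depth textures rendered from the light's point of view, and all names here are hypothetical.

```python
from typing import List, Tuple

Segment = Tuple[float, float, float]  # (x_start, x_end, height y)


def build_shadow_map(segments: List[Segment], width: float, resolution: int,
                     light_height: float) -> List[float]:
    """For each texel, store the depth (distance below the light) of the nearest surface."""
    texel = width / resolution
    depth = [float("inf")] * resolution
    for x0, x1, y in segments:
        for i in range(resolution):
            centre = (i + 0.5) * texel  # each texel samples the scene at a single point
            if x0 <= centre < x1:
                depth[i] = min(depth[i], light_height - y)
    return depth


def in_shadow(point: Tuple[float, float], shadow_map: List[float], width: float,
              light_height: float, bias: float = 1e-3) -> bool:
    x, y = point
    i = min(int(x / width * len(shadow_map)), len(shadow_map) - 1)
    # In shadow if something nearer to the light was recorded in this texel.
    # The small bias stops a surface from shadowing itself ("shadow acne").
    return light_height - y > shadow_map[i] + bias


ground = (0.0, 10.0, 0.0)
roof = (4.0, 6.0, 5.0)  # a small occluder hovering above the ground
fine = build_shadow_map([ground, roof], 10.0, 10, 10.0)
coarse = build_shadow_map([ground, roof], 10.0, 2, 10.0)
print(in_shadow((5.0, 0.0), fine, 10.0, 10.0))    # True: under the roof
print(in_shadow((5.0, 0.0), coarse, 10.0, 10.0))  # False: the roof fell between samples
```

A higher resolution catches smaller occluders but costs memory (one depth value per texel, and in 2D the count grows with the square of the resolution), while a low resolution produces blocky or missing shadows. A ray tracer instead asks the exact question for each point: is there anything between here and the light?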

Graphics revolution or simple evolution?

Let's be honest: Nvidia isn't entirely wrong to declare that ray tracing could be the biggest graphics revolution since the CUDA architecture. Sure, games are looking better and better thanks to 4K resolution, improved shaders and so on. But when you consider that Crysis (released in 2007) is still used as a benchmark, it's clear that we haven't seen a real graphics revolution for ten or twelve years. Whether consoles are to blame or not remains to be seen. The fact is that something is finally happening in terms of graphics for gamers.

And that something might well be ray tracing. The first game demos with ray tracing are frankly impressive. I feel like I'm in an animated film, except this one I can control. The best thing is for you to see for yourself.

As mentioned at the beginning of the article, RTX GPUs rely on a combination of ray tracing and rasterisation. And indeed, the three demo videos don't just show the impressive rendering of lighting effects using ray tracing. If you take a closer look at the Battlefield V video, at around 20 seconds you'll see a yellow tram carriage in the background. It shows no lighting effects, although its surface should reflect the explosion to its left, a sign that not every object in the scene is ray traced. Nvidia calls the combination of these two technologies hybrid rendering.

The demos are breathtaking nonetheless, and we're left to rejoice in the new graphics revolution.

The new GPUs available from digitec

RTX 2080 Ti

RTX 2080

