Antutu Benchmark manipulation with a kitchen and some motivation

The standard is rubbish. Antutu Benchmark has more errors than anyone would like. An analysis and an example with a refrigerator.

The Antutu Benchmark app and its sister Antutu 3D Bench are considered the de facto standard for smartphone performance tests. Antutu stresses your smartphone in all kinds of ways, testing graphics performance, scrolling speed and intelligence in image analysis, among other things. The end result is a six-digit number that tells you "Your smartphone is 265,852 good".

The problem is that the scores are extremely easy to manipulate.

That's why I decided to put this to the test and show you a way to manipulate Antutu so that the app spits out significantly higher numbers. I compare the Xiaomi Black Shark gamer phone with itself. Plus a few reference figures from the Huawei Mate 20 Pro, because the differences say a lot.

The setup: The two test phones

It makes a difference to the score whether your phone is running a lot of apps, whether Wi-Fi is on or off, and so on. In short: the more your phone has to do in the background, the worse it will score in the Antutu benchmark. Or maybe not, because there is another problem. Antutu's official website does not provide any information about how exactly the test is run or which factors it takes into account. It simply claims to be "the most professional benchmark", and that is supposed to be enough.

So I set up the two test phones to differ from each other as much as possible.

Xiaomi Black Shark: The virgin

The Xiaomi Black Shark is freshly formatted. It is the smartphone on which I will manipulate the scores by simple means. It is currently ranked 7th on Antutu's leaderboard with a global score of 291,099, because Antutu logs all the results and then - again without giving any details or methodology - calculates an overall score for the global reference.

Huawei Mate 20 Pro: The pocket phone

The Mate 20 Pro from Huawei is currently the frontrunner on Antutu with a global score of 305,437, with the Mate 20 Pro, the Mate 20 and the Mate 20 X - smartphones with the Kirin 980 system-on-a-chip (SoC) - occupying the top three spots. This is interesting because the SoC comes with two Neural Processing Units (NPUs). In everyday use, these two NPUs do nothing other than allocate more system resources to apps that you use frequently. In theory, that means: the more often I fire up Antutu, the higher the score. However, experience has shown that this only works over a longer period of time and not after three tests on a Friday morning.

The Mate 20 Pro is already in use. Apps such as Instagram, email and WhatsApp are synchronising in the background. Wi-Fi is off, mobile data is on.

The test begins

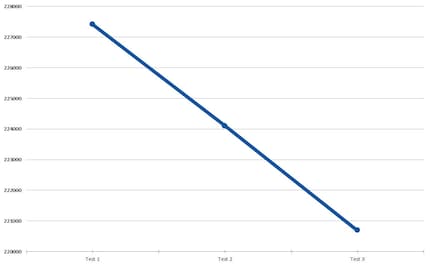

Before I start with the manipulation, I need to know what the Black Shark is capable of. I run Antutu three times in a row at my workplace and in a meeting room. The scores:

- 227 410

- 224 099

- 220 689

In the graph I see a linear decrease. Note: The graph is in the style of the Swiss nationalist-conservative party SVP: I have faded out the range from 0 to 220 000 so that the fluctuations are visually as pronounced as possible. This also applies to the other charts in this article. At the end, I draw a picture in which the Mate 20 Pro loses by a large and embarrassing margin.
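To put a number on how much the faded-out range exaggerates the fluctuations, here is a minimal Python sketch. It assumes the scores are drawn as bars measured from the start of the visible axis, which is an assumption about the chart type; the helper name `drawn_ratio` is purely illustrative.

```python
# Room-temperature Black Shark scores from the three runs above.
scores = [227_410, 224_099, 220_689]

def drawn_ratio(high, low, axis_start):
    """Ratio of two bar heights when the value axis begins at axis_start."""
    return (high - axis_start) / (low - axis_start)

# Axis starting at zero: the bars reflect the real ratio of the scores.
honest = drawn_ratio(scores[0], scores[-1], axis_start=0)

# Axis starting at 220 000: only the part above the cut-off is drawn.
cropped = drawn_ratio(scores[0], scores[-1], axis_start=220_000)

print(f"axis from 0:       first bar is {honest:.2f}x the last")
print(f"axis from 220 000: first bar is {cropped:.2f}x the last")
```

Under that assumption, a real difference of about 3 per cent between the first and third run is drawn as a bar nearly eleven times as tall.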

The score is dragged down by the temperature of the device: the hotter your smartphone runs, the more likely its thermal protection is to kick in. Its job is to make sure your smartphone doesn't burst into flames when you push it hard. Since performance generates heat, performance is throttled until the device has reached an acceptable temperature again.

The thing with temperature

The easiest thing to manipulate is the temperature. If I cool a smartphone, it can run at full steam for longer before the cooling system throttles performance. So I put the Black Shark in a grip, also known as a snack bag, and then put it in the fridge. After 30 minutes, I run Antutu again.

The score: 284 089

Thanks to the external cooling provided by the refrigerator, the phone has become 24 per cent faster or better according to Antutu.
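The size of that gain depends on which room-temperature run serves as the baseline. The following Python sketch recomputes it from the scores in this article; the function name `gain_percent` and the choice of the two baselines (first run and mean of all three runs) are my own assumptions for illustration.

```python
# Scores taken from this article.
room_temp = [227_410, 224_099, 220_689]  # three runs at room temperature
fridge = 284_089                         # after 30 minutes in the fridge

def gain_percent(baseline, score):
    """Relative improvement of score over baseline, in per cent."""
    return (score - baseline) / baseline * 100

# Baseline 1: the first, best room-temperature run.
gain_vs_first = gain_percent(room_temp[0], fridge)

# Baseline 2: the mean of all three room-temperature runs.
gain_vs_mean = gain_percent(sum(room_temp) / len(room_temp), fridge)

print(f"vs. first run: {gain_vs_first:.1f} %")
print(f"vs. mean:      {gain_vs_mean:.1f} %")
```

Against the first run the gain comes out just under 25 per cent, against the mean of the three runs just under 27 per cent, so the figure quoted above is at the conservative end.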

Let's see if I can take this to the extreme. Black Shark back in the Grip and then in the freezer. 30 minutes later: 285,853, so it seems that system performance is kept the same from a certain temperature, or hardware performance reaches its zenith in the mid-280,000s.

Of course, extreme cooling has an impact on the battery life of the Black Shark. But Antutu doesn't take into account the fact that I use up 60% of the battery within an hour.

As the phone warms up again, over three tests run at room temperature immediately after the freezer, the score returns to normal.

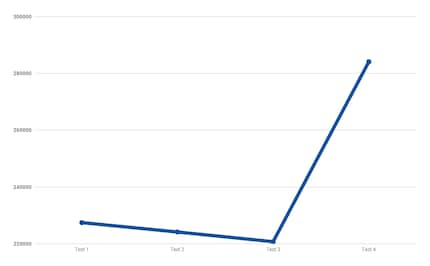

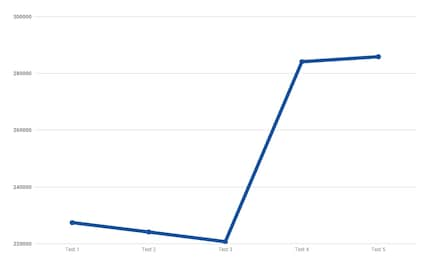

The values in detail:

- 227 410

- 224 099

- 220 689

- 284 089

- 285 853

- 283 702

- 250 820

- 230 541

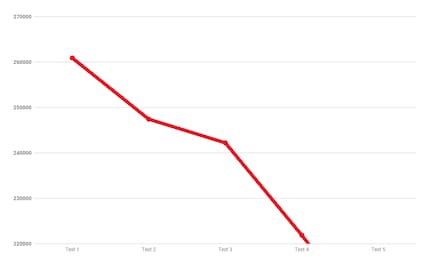

The Mate 20 Pro falls below 200,000

The Huawei Mate 20 Pro doesn't have it easy. I only test it at room temperature, pressing "Test Again" as soon as the previous test is over. The Huawei smartphone gets hotter and hotter, and by the fifth test it drops below the 200,000 mark.

The score: 199 911.

The test results in detail:

- 260 886

- 247 398

- 242 193

- 221 842

- 199 911
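How much does back-to-back testing cost the Mate 20 Pro? This short Python sketch works it out from the five scores listed above. The variable names are illustrative, and attributing the whole decline to heat is the article's interpretation, not something the code can prove.

```python
# Mate 20 Pro scores from five consecutive runs at room temperature.
mate_scores = [260_886, 247_398, 242_193, 221_842, 199_911]

# Points lost between each pair of consecutive runs.
drops = [prev - cur for prev, cur in zip(mate_scores, mate_scores[1:])]

# Overall loss from the first to the fifth run, in per cent.
total_loss_percent = (mate_scores[0] - mate_scores[-1]) / mate_scores[0] * 100

print("drop per run:", drops)
print(f"total loss:   {total_loss_percent:.1f} %")
```

The drops are not even: the last two runs each lose more than 20,000 points, and in total the phone scores roughly 23 per cent lower on the fifth run than on the first.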

The unanswered questions

It is obvious that Antutu is of little use as a benchmark tool. The most serious problem with the app is that the methodology for determining the global score is not transparent. If it is an average, then other people's tests must deliver astronomical values. An example: the Mate 20 Pro transmitted its 199 911 to Antutu. You agree to this when you download the app. The global score is 305 437, which means that if only one other test went into the average, it must have finished with a score of 410 963. Or there were a million other tests averaging a shade above 305 437. But if Antutu eliminates such low scores or somehow mathematically normalises for system temperature, then the app developer doesn't communicate this.
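The arithmetic behind that example can be sketched in a few lines of Python. It assumes the global score is a plain arithmetic mean of all submitted results, which is exactly what Antutu does not disclose; the function name `required_mean_of_others` is made up for this illustration.

```python
def required_mean_of_others(target_mean, known_score, n_others):
    """Mean the other n_others results need so that, together with
    known_score, the overall arithmetic mean equals target_mean.

    From (known + n * x) / (n + 1) = target follows
    x = target + (target - known) / n.
    """
    return target_mean + (target_mean - known_score) / n_others

GLOBAL_SCORE = 305_437   # Mate 20 Pro global score quoted above
MY_RESULT = 199_911      # my fifth run on the Mate 20 Pro

one_other = required_mean_of_others(GLOBAL_SCORE, MY_RESULT, 1)
million_others = required_mean_of_others(GLOBAL_SCORE, MY_RESULT, 1_000_000)

print(f"one other test:     {one_other:,.0f}")
print(f"one million others: {million_others:,.2f}")
```

With a single other result, that result would have to be 410,963. With a million other results, each only needs to average about a tenth of a point above the global score, so one bad run like mine simply disappears in the crowd.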

It's far too easy to manipulate the scores. If I can fake 24 per cent more performance on my way to the coffee machine, then there's something wrong with the benchmark. If I can artificially depress a score by simply running a test repeatedly, then the test isn't worth much.

Furthermore, I can use the simplest graphical means to paint a picture of a phone that is more distortion than realistic depiction. I have not only cropped the value range as described above, but also used colours that carry certain suggestions. Red, in particular, is associated with warning and danger. My excuse for the choice of colour: It's CD/CI-compliant with the digitec colours. Just like the political party, I can say: "If you read something into it, then that's your business".

Then there's the matter of the global scores. Even with my fridge methodology, which I think is excessive, I didn't achieve a value that was above the Black Shark's global score of 291,099, although the freezer run came close. How did they calculate that? Under what circumstances do values come together that outweigh my lame 284 thousand?

The test brings the sobering realisation that benchmarks are not quite as simple as they pretend to be and that a number that is easy to read is not necessarily meaningful. But we currently have no better alternative.

So, that's it. Stay alert and scrutinise everything.

Journalist. Author. Hacker. A storyteller searching for boundaries, secrets and taboos – putting the world to paper. Not because I can but because I can’t not.
