Guide

Ghost of Yōtei: 23 helpful beginner’s tips for this samurai adventure

by Domagoj Belancic

It's one of the big questions in computer science: can computers solve any problem in a reasonable amount of time? Yes, says a new technical paper - and it's generating a lot of enthusiasm.

Scientists love to categorise things - at least on an abstract level. Chemists, for example, categorise all elements in the periodic table. Biologists categorise the earth's living creatures into families and species. And mathematicians have, after a long search, found and listed all infinite simple groups in the past decade.

Computer scientists are also looking for order, albeit a little differently: they sort problems according to their complexity. They have already discovered in the past that certain tasks are very easy for computers to solve. The computing time required to solve them only increases slowly (polynomially) with the length of the problem. From the point of view of computer scientists, such computational tasks therefore belong to the complexity class P.

Problems that are easy to check, even if they are difficult to solve, belong to the class NP. One example is a challenging Sudoku puzzle: it is difficult to find the correct solution. However, once you have worked it out or are presented with a completed sheet, it is easy to check whether it is correct.

The fun starts with P and NP: computer scientists have now created a hierarchy of complexity classes, from very simple to extremely sophisticated. The quantum computer has brought a twist: it enables new strategies when it comes to checking the correctness of the given solution to a problem.

An open question in complexity research has always been how complicated a problem can be at most so that a computer can still check it in a reasonable - i.e. polynomial - time. A team led by Zhengfeng Ji from the University of Technology, Sydney, has now found a surprising answer that is currently causing quite a stir among experts. According to the theory, even the most difficult problems in computer science can be tackled in a reasonable amount of time.

"I can't think of a single person who would have expected this," writes the respected computer scientist Scott Aaronson from the University of Austin in his blog. Should the work stand up to scrutiny by independent experts, it would be one of the biggest surprises in theoretical computer science this century.

Since the 1970s, computer scientists have been trying to subdivide complexity classes ever more finely. To this end, they have repeatedly identified more complicated problems as NP. The main scope for more complexity was the verification of the solution. In some cases, the time required for this no longer increases polynomially with the length of the task, but rather exponentially. This makes things much more difficult than a Sudoku puzzle, the solution of which is presented to you.

In order to be able to classify such problems, computer scientists introduced so-called interactive proof systems in the 1980s. They imagined an omnipotent computer, the "prover". This computer is always able to provide the solution to a problem, regardless of how complex it is. It can therefore also calculate problems that are far more complicated than Sudoku puzzles. It has infinite memory capacity and can, in principle, calculate for an infinite amount of time.

However, the prover is not trustworthy, which is why you also need a verifier to recalculate the result provided. In Sudoku, it would be the verifier who ensures that a solution is correct. Unlike the prover, the verifier is a realistic computer that has limited resources. This means that its calculation time can only be polynomially dependent on the size of the task.

Because the solution provided by the prover may be extremely long and complicated, the examiner asks the prover various questions in an attempt to ascertain its veracity. Consider, for example, a table in a pitch-black room with several identically shaped wooden blocks lying on it. The task is to sort them according to their colour. The demonstrator - omnipotent as he is - has no problem with this. Despite the darkness, he divides the blocks into two piles.

The examiner must now ask questions to find out whether two randomly selected blocks from two different piles are really different colours. In this case, the examiner could say that one block is red and the other is blue. But is that true? Or is the demonstrator lying? To find out, the examiner can hide the wooden blocks behind his back, swap them and ask the demonstrator which one is the red one. If the experiment is repeated often enough, the person giving the evidence will either be wrong in around half of all cases if they have lied, or will always answer correctly - provided they have told the truth.

Computer scientists have discovered that the solution to some tasks can only be checked for correctness using such a questioning strategy. If these problems are taken into account, the complexity class NP expands to "IP". There is even a way to tackle even more complex problems: Allow not just one reasoner, but several. They can exchange information while they are working on a task. However, as soon as they deliver a result and the examiner analyses it, they are separated from each other.

In this way, the task that the examiner then tackles using the question-answer strategy can be even more complicated - and still be checked in polynomial time. This is because it is easier for the examiner if he can question two or more instances: Just as a police inspector has a better chance of convicting witnesses of lying if he has several separate interrogations, more challenging problems can be solved in this way. The associated complexity class "MIP" is even greater than IP, as computer scientists discovered in 1988.

After this result, the field of interactive proof systems came to a standstill. At least until some researchers wondered what would happen if the two provers were not classical computers, but quantum computers that are coupled to each other via quantum physics. This is made possible by the phenomenon of entanglement, which Albert Einstein had already racked his brains over.

He called it "spooky action at a distance", because if you measure the state of one of two entangled objects, you also determine the state of the other - regardless of how far apart they are. Initially, this fact caused physicists a stomach ache, but it is now known that no information can be transported in this way.

In the beginning, complexity researchers did not pay much attention to the possibility of entangled provers, as it was assumed that the associated complexity class MIP* would be smaller than MIP or even IP. After all, the advantage of MIP is that the omniscient computers are questioned separately. If, on the other hand, they are entangled, the response of one affects the state of the other.

"The natural reaction of everyone, including me, was to give the provers more power now," recalled computer scientist Thomas Vidick of the California Institute of Technology, co-author of the new paper, in Quanta Magazine some time ago. After all, the evidence leaders could use the entanglement to coordinate their answers during interrogation by the examiner.

However, the first big surprise came in 2012: Vidick proved with his colleague Tsuyoshi Ito from the University of Waterloo that entangled quantum computers can also be used to check all problems from MIP in polynomial time. This means that both complexity classes are at least the same size.

The next twist followed in 2019. Physicists Anand Natarajan from the California Institute of Technology and John Wright from the Massachusetts Institute of Technology discovered that the quantum computer class MIP* is even larger than MIP. This means that entanglement does not have to be a disadvantage, but rather the examiner can actually use it to their advantage - and thus cross-check even more complex tasks than expected.

The trick is to identify suitable questions through interleaving. In other words, the evidence providers first question themselves. At first glance, this may seem paradoxical, but because the two evidence providers are intertwined, they can run through several answers at the same time and thus deal with more conceivable verification steps. They pass these questions on to the examiner.

The examiner then only has to guarantee during the actual test that the questions are representative and meaningful and that the answers do not lead to any contradictions. In this way, they can carry out the test in a reasonable amount of time; the computing time required simply increases polynomially with the length.

This strategy of "correlated questions" therefore means that a proof can be checked more quickly. However, care must be taken to ensure that the answers given by the provers are not also intertwined. This would be tantamount to two suspects colluding during an interrogation. Natarajan and Wright solved the problem by utilising another bizarre concept from quantum mechanics: the inherent uncertainty of the microcosm, according to which two "complementary" properties of an object, such as position and momentum, cannot be determined with arbitrary precision at the same time.

If you have measured the speed of a particle and then determined its position, you no longer know how fast the object is now. For this reason, the two demonstrators are only allowed to carry out complementary measurements so that their answers do not influence each other. If you ask the first quantum computer for a calculated value, this deletes the corresponding information from the second quantum computer.

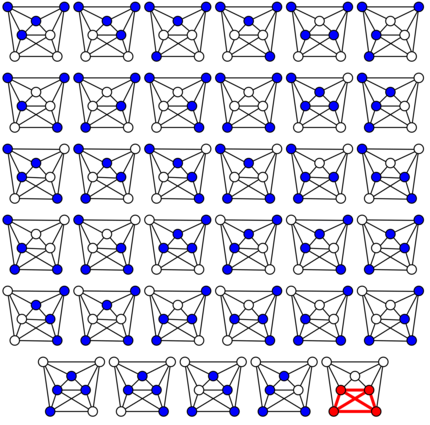

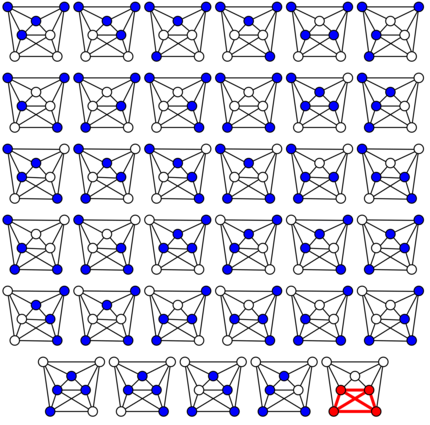

Now computer scientists knew that MIP* exceeds the complexity of MIP. However, it was initially unclear how large the class actually is. How much better can solutions be checked by asking entangled quantum computers? In fact, this seems to maximise the number of tasks: As Zhengfeng Ji together with Natarajan, Vidick, Wright and Henry Yuen from the University of Toronto have now shown in their sensational technical paper, MIP* contains all computable problems in computer science.

According to the proof, MIP* is identical to the huge complexity class RE. It includes all decision problems (those whose answer is yes or no) that a computer can answer in the affirmative in finite time. This includes, among other things, the famous halting problem: This involves determining whether a computer will ever come to a halt when calculating a task - or continue calculating forever.

The British computer scientist Alan Turing proved in 1936 that there is no algorithm that can solve the halting problem in general. However, this does not contradict the latest publication. This is because the work by Ji and his colleagues states that an ordinary verifier can check in polynomial time the statement of two entangled omniscient quantum computers that claim that a computer will come to a halt for a certain task.

This alone is remarkable. But the computer scientists' result also has implications for mathematics and quantum physics. This is because it turns out that the embedding problem formulated by Fields Medal winner Alain Connes in the 1970s was wrong - something that many scientists had not expected. Further hypotheses depend on this, which consequently cannot be correct either.

The embedding problem deals, among other things, with so-called operator algebras, which belong to the mathematical field of functional analysis, but also appear in quantum mechanics. And indeed, there is a physical statement that is equivalent to Connes' problem: all quantum mechanically permitted correlations can be approximated arbitrarily well by a finite number of entangled qubits. Contrary to what most scientists expected, the result of the theoretical computer scientists shows that this assertion is false.

Contrary to what is often assumed, an infinite system cannot always be approximated arbitrarily precisely by a finite one

This has drastic consequences, for example when it comes to the difference between finite and infinite systems. Contrary to what is often assumed, an infinite system cannot always be approximated exactly by a finite one. In their publication, Ji and his colleagues give an example of a situation in which two people are playing a game. If both are infinitely strongly entangled with each other, then they win with a chance of one; if, on the other hand, they are only finitely entangled, then the probability is at most one and a half.

However, unlike the inequalities formulated by John Bell in the 1960s, such a game cannot be tested in the laboratory, as there is no way to entangle two systems infinitely with each other. However, the work proves that complexity theory - contrary to what is often claimed - is not a completely remote field that stands alone, but has an impact on other areas, even if they are as abstract as theoretical quantum physics.

We are partners of Spektrum der Wissenschaft and want to make well-founded information more accessible to you. Follow Spektrum der Wissenschaft if you like the articles.

[[small:]]

Experts from science and research report on the latest findings in their fields – competent, authentic and comprehensible.

Practical solutions for everyday problems with technology, household hacks and much more.

Show all