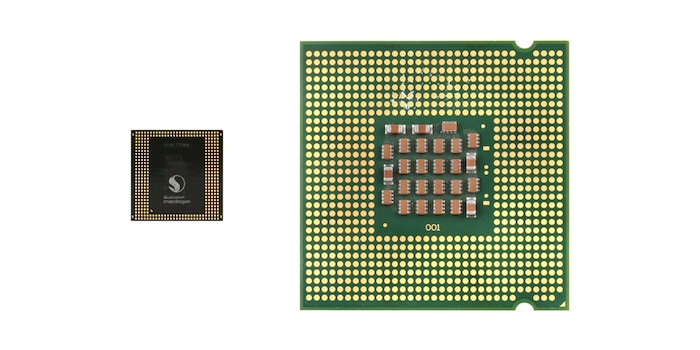

David and Goliath: the SoC in smartphones and the CPU in computers

We spend our days typing on our computers and our smartphones without necessarily realising that the technologies of these two types of device diverge. In fact, the performance and architecture of the essential components are completely different.

The classic computer has an integrated processor, whereas the smartphone is equipped with an SoC ("System on a Chip") that brings together its main elements (processor, graphics unit, memory controller and even Ethernet or Bluetooth interfaces) on a single chip.

The architecture of a processor

Why is it that your smartphone, which has more processor cores and a higher clock speed, performs no better than your old notebook? Because of its processor architecture. x86 and ARM are the two most common architectures. You'll find x86 in laptops and desktops, and ARM in smartphone SoCs.

While smartphones and tablets have brought RISC-family ARM processors to the fore in recent years, their development actually dates back to the 1980s. British company Acorn marketed its 32-bit, 4 MHz prototype in 1983. At the time, the acronym "ARM" stood for "Acorn RISC Machine". It now stands for "Advanced RISC Machine". Success was not long in coming, and mass production at a clock speed of 8 MHz began in 1986.

The x86 architecture was created at the end of the 1970s. The Intel i8086, the first processor with the x86 instruction set architecture, was introduced to the market in 1978. Hearing "Intel 80286", "Intel 80386" (AMD Am386) or "Intel 486" (AMD Am486) can make some of us nostalgic. I actually took my first steps in computing with a 286, on which I learned DOS commands and played games whose graphics bore no resemblance to what we see today.


Different architectures

ARM processors were designed to consume little electricity and to have a simple instruction set. x86 processors, on the other hand, are primarily designed for high performance and throughput. The two processor families differ in several respects: computing power, electricity consumption and end-user software.

For what computing power were the processors designed?

The name of the ARM processor family already gives us some clues, since "RISC" stands for "Reduced Instruction Set Computing". The processor keeps its instructions simple and their number as small as possible. This simplicity has certain advantages for hardware and software engineers. Simple instructions require fewer transistors, which reduces the surface area of the chips and therefore their size. On the other hand, a program needs more of these simple instructions to do the same work, which requires more memory and increases execution times. The processor tries to compensate for this weakness with higher clock speeds and a strategic pipeline (division of machine instructions into several stages).

The x86 processors in the CISC family work on the opposite principle, since "CISC" stands for "Complex Instruction Set Computing". Intended for complex tasks requiring great flexibility, they are capable of performing calculations directly on values in memory as well as between processor registers, without the software having to load the required variables beforehand. Multiplications with floating-point numbers, complex memory manipulations and data conversions are among the many common operations for these types of processors.

In addition:

Unlike x86 CPUs, ARM CPUs include only three instruction categories.

- Memory access instructions (load/store)

- Arithmetic or logic instructions intended for register values

- Instructions intended to modify program flow (jumps, subroutine calls)
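The three categories above can be sketched with a toy interpreter. This is only an illustration of the load/store idea, not real ARM semantics: the instruction names (`load`, `store`, `add`, `jump_if_zero`), the register names and the `run` function are all made up for this example.

```python
# Toy load/store machine. Illustration only: instruction names and
# register names are hypothetical and do not match real ARM mnemonics.

def run(program, memory):
    """Execute a list of instruction tuples against a memory dict."""
    regs = {}
    pc = 0  # program counter: index of the next instruction
    while pc < len(program):
        op, *args = program[pc]
        pc += 1
        if op == "load":            # memory access: register <- memory
            reg, addr = args
            regs[reg] = memory[addr]
        elif op == "store":         # memory access: memory <- register
            reg, addr = args
            memory[addr] = regs[reg]
        elif op == "add":           # arithmetic works on registers only
            dst, a, b = args
            regs[dst] = regs[a] + regs[b]
        elif op == "jump_if_zero":  # program flow: conditional jump
            reg, target = args
            if regs[reg] == 0:
                pc = target
        else:
            raise ValueError(f"unknown instruction: {op}")
    return memory


# Adding two variables takes four simple instructions here, because
# arithmetic may not touch memory directly.
memory = {"x": 2, "y": 3, "sum": 0}
program = [
    ("load", "r0", "x"),
    ("load", "r1", "y"),
    ("add", "r2", "r0", "r1"),
    ("store", "r2", "sum"),
]
print(run(program, memory)["sum"])  # prints 5
```

A CISC-style instruction set could express the same addition with fewer instructions by letting the arithmetic instruction read its operands from memory itself, which is the trade-off described above.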

Power consumption

As the size of a mobile device limits the capacity of its battery, the power consumption of an SoC is a decisive factor. For example, the SoC in your smartphone consumes less than 5W. It almost never needs to be actively cooled, which saves even more energy.

In contrast, a high-end x86 processor can easily consume 130W due to its complexity.

Comparing David and Goliath by encoding a video

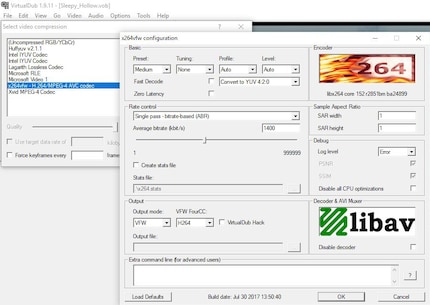

Comparing the two architectures doesn't really make sense, as each has its advantages and areas of application. But I'd still like to know how the performance of the processors differs. So I ripped the Sleepy Hollow DVD and converted it from MPEG-2 to H.264. In other words, I'm reducing this 6.3GB film to around 1400MB, and doing so at virtually the same quality.

The devices in my duel:

- A 2012 Lenovo X220 notebook with Intel Core i5 2520M, 2 cores, 2.50 GHz base clock speed (max. of 3.20 GHz in turbo mode)

- A Blackberry Priv smartphone from 2015 with Qualcomm Snapdragon 808, 6 cores, up to 2.0 GHz clock speed

Of course, I make sure that the same codec and bitrate are set on both devices. I convert the film at 1400 kbit/s (using encoding software) in a single pass just for the test (you'd normally run at least two passes to improve the quality of the video). I don't touch the audio tracks; I leave them in DTS format.
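To get a feel for what a given bitrate means for file size, here is a minimal sketch. The running time of 100 minutes is a made-up placeholder, since the article doesn't state the length of the film, and `stream_size_mb` is a hypothetical helper, not part of any encoder. It treats the bitrate as a constant average and covers the video stream only; the untouched DTS audio tracks come on top.

```python
def stream_size_mb(bitrate_kbit_s, duration_s):
    """Size in megabytes (10^6 bytes) of a stream with a given average bitrate."""
    bits = bitrate_kbit_s * 1000 * duration_s
    return bits / 8 / 1_000_000


# Assumption: a 100-minute film, used purely as an example value.
example_duration_s = 100 * 60

video_mb = stream_size_mb(1400, example_duration_s)
print(f"Video stream at 1400 kbit/s: about {video_mb:.0f} MB")
```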

Converting on the notebook

I use VirtualDub. I leave the black borders on the video so that the conditions are the same later on the smartphone, where I can't trim or crop the image.

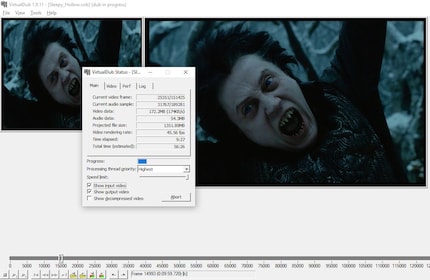

My old Core i5 2520M spends about an hour on it. In 2002, the same operation took eight hours on my Pentium; I used to let it convert all night.

Conversion on the smartphone

It's hard to find an application that doesn't crash or give me an error message when converting a 6.3GB file. I ended up choosing "A/V Converter".

My smartphone gets to work and manages to convert 10 frames per second. I wait 5 minutes before the app displays an estimated total duration of 4 hours and 3 minutes. The smartphone needs four times as long as the notebook.
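The figures above allow a rough back-of-the-envelope check. The sketch below works backwards from the app's estimate to the number of frames, then derives what the notebook's hour would mean in frames per second. The playback rate of 25 frames per second is an assumption (typical for a PAL DVD) and is not stated in the article.

```python
PHONE_ENCODE_FPS = 10                      # from the article
PHONE_ESTIMATE_S = 4 * 3600 + 3 * 60       # 4 h 3 min, from the article
NOTEBOOK_TIME_S = 1 * 3600                 # "about an hour", from the article
PLAYBACK_FPS = 25                          # assumption: PAL DVD frame rate

# Frames the app expects to process at its current speed.
total_frames = PHONE_ENCODE_FPS * PHONE_ESTIMATE_S

# Implied running time of the film under the 25 fps assumption.
film_minutes = total_frames / PLAYBACK_FPS / 60

# Encoding speed the notebook would need to finish in about an hour.
notebook_fps = total_frames / NOTEBOOK_TIME_S

print(f"Frames to encode: {total_frames}")
print(f"Implied film length: about {film_minutes:.0f} minutes")
print(f"Notebook speed: about {notebook_fps:.1f} frames per second")
```

Under these assumptions the notebook encodes at roughly 40 frames per second, which matches the factor of four between the two devices.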

Summary

My test shows that the SoC of a smartphone cannot be compared to the CPU of a standard computer. The time it takes to perform the same operation on the smartphone illustrates just how little our trusty mobile companions can do.

On the other hand, SoCs perform very well for their low power consumption. My i5 processor consumes 35W, which would be enough to power 7 SoCs. If those 7 SoCs worked on the film in parallel, they could convert it faster than my computer's CPU for the same power consumption.
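This claim can be checked with a little arithmetic. It is only a sketch: it uses 5W as the SoC's consumption (the article says "less than 5W", so this is an upper bound), looks at the processors alone rather than the whole devices, and assumes the film could be split across several SoCs without any overhead.

```python
CPU_WATTS = 35             # Core i5 2520M, from the article
SOC_WATTS = 5              # upper bound from the article ("less than 5W")
NOTEBOOK_HOURS = 1.0       # "about an hour"
PHONE_HOURS = 4 + 3 / 60   # estimated 4 h 3 min


def energy_wh(watts, hours):
    """Energy in watt-hours used by running at `watts` for `hours`."""
    return watts * hours


# How many SoCs fit into the CPU's power budget.
socs_in_budget = CPU_WATTS // SOC_WATTS

# Energy each device's processor spends on one conversion.
notebook_energy = energy_wh(CPU_WATTS, NOTEBOOK_HOURS)
phone_energy = energy_wh(SOC_WATTS, PHONE_HOURS)

# Idealised speed-up if all SoCs in the budget shared the work perfectly.
cluster_speedup = socs_in_budget * NOTEBOOK_HOURS / PHONE_HOURS

print(f"SoCs within the CPU's power budget: {socs_in_budget}")
print(f"Energy per conversion: notebook {notebook_energy:.2f} Wh, phone {phone_energy:.2f} Wh")
print(f"Idealised speed-up of {socs_in_budget} SoCs over the CPU: {cluster_speedup:.2f}x")
```

With these numbers a single SoC needs roughly 20 Wh for the conversion against 35 Wh for the CPU, and seven SoCs in parallel would be about 1.7 times as fast as the notebook.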

