True Black - Saving electricity on a low flame

by Dominik Bärlocher

At I/O, Google shows what we can expect in the near future. Most importantly, spoken language and text are moving closer together, and Google wants to break down many barriers with this. There are also two new smartphones, a dark mode for Android Q, a new home hub called Nest Hub Max and more.

Google I/O is a festival for developers and enthusiasts. And an opportunity for the search company, which is much more than just a search company, to showcase itself. Developers such as Kevin Barry, developer of the Nova Launcher, can take part in workshops and gain an insight into the open Android software and its possible applications. I suspect the bars in the area around the Shoreline Amphitheatre in Mountain View, California, will also be pleased.

For us overseas, however, it is the I/O keynote that matters most, because it shows what innovations and changes Google is planning for the near future in terms of hardware, services and software.

Google has already let slip that the operating system for wearables, WearOS, will be receiving an update. In a blog post, Frank Deschenes, Product Manager, describes how WearOS will behave in future.

Tiles are the new feature. You reach them simply by swiping on the smartwatch, and each one shows its data on the full screen.

You can arrange the Tiles however you like. The update that brings them is due to be released in the course of May.

Another blog post describes the new look of Android Auto, Google's navigation and general in-car software. Little has changed in terms of functionality: the music you were listening to on your smartphone still continues to play in the car. The look, however, is getting darker, which goes hand in hand with the dark mode trend that is being rolled out to more and more Google apps this year. In dark mode, the background is not bright white but, in Google's case, dark grey: hex #424242.

Nowhere is dark mode more important than behind the wheel. It's nice on smartphones and saves some battery, but in the car it can, to put it bluntly, be a matter of life and death. If you have to look at a bright display at night, you lose your night vision. It only takes a few seconds for your eyes to get used to the darkness again, but even then your night vision is not what it was before you looked at the bright display. These few seconds can make the difference between an accident and a safe drive. It would be perfect if Google went all the way to AMOLED black, i.e. hex #000000, because then the pixels of the screen background in Android Auto, and preferably everywhere in Android, would not be supplied with power at all. In other words: less light in the cockpit, and your night vision is spared. Still, a decent dark mode on Android Auto is a solid start.
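To put the two greys in perspective, here is a minimal sketch that compares how much light #424242 and #000000 emit relative to pure white. It uses the standard sRGB relative-luminance formula; the function names are made up for this illustration, and treating luminance as a stand-in for glare or OLED power draw is a simplifying assumption, not a measurement.

```python
def hex_to_rgb(hex_color):
    """Turn a string such as '#424242' into an (r, g, b) tuple of 0-255 ints."""
    h = hex_color.lstrip("#")
    return tuple(int(h[i:i + 2], 16) for i in (0, 2, 4))


def relative_luminance(hex_color):
    """sRGB relative luminance: 0.0 for black, 1.0 for white."""
    def linearize(channel):
        s = channel / 255
        # Undo the sRGB gamma curve to get linear light.
        return s / 12.92 if s <= 0.04045 else ((s + 0.055) / 1.055) ** 2.4

    r, g, b = (linearize(c) for c in hex_to_rgb(hex_color))
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


if __name__ == "__main__":
    for colour in ("#FFFFFF", "#424242", "#000000"):
        print(colour, round(relative_luminance(colour), 4))
```

Under these assumptions, Google's dark grey comes out at roughly 5 percent of the light of a white background, while true black comes out at exactly zero.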

We remember a time when Google's devices were not yet called Pixel, but Nexus. They were cheap and good. So good, in fact, that some users still use them today. With the Pixel era, the phones became massively more expensive and went from a useful, inexpensive device to a prestige object with a killer camera. Google is now taking a step back and releasing the Google Pixel 3a and the larger 3a XL, two smartphones that compete in the mid-range.

The larger Google Pixel 3a XL comes with a screen diagonal of 6 inches, i.e. 15.24 centimetres. Instead of an AMOLED screen, Google is rumoured to be using a so-called gOLED display here. That stands for "Google OLED", perhaps. Experts disagree, including on what makes a gOLED display different from an AMOLED display. Our colleague Jan Johannsen has already published a full review online.

Artificial intelligence is a top priority at Google this year. Android is set to become even smarter and more integrated. "Google should become even more helpful" is the message. One of these features is "Full Coverage" in Google News, which gives you multiple perspectives on a topic. Google Search is set to get this over the course of the year. Among other things, Google wants to counter the belief in so-called fake news and bring some balance to the media world.

Of course, the Assistant is also getting faster. With the "Continued Conversation" feature, you don't even have to say "Hey Google" each time to activate the Assistant. However, one feature is still missing: the AND operator. You can ask any number of questions at breakneck speed, send text messages and emails, check weather reports and more, but only one thing at a time. "Hey Google, switch on the light and send Andrea a text message with the content 'I like dolphins'" is not possible. This is because Google still tries to execute the single command "switch on the light and text Andrea 'I like dolphins'" rather than the two commands that are linked with the "and". At least that's how it seems after the demo on stage.
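What such an AND operator would have to do can be sketched in a few lines. This is a purely hypothetical illustration and says nothing about how the Assistant actually parses speech: the function name is invented, and it assumes the utterance is already text with balanced double quotes around any dictated content.

```python
import re

# Match the word "and" only when an even number of double quotes follows,
# i.e. when the "and" sits outside any quoted message.
_AND_OUTSIDE_QUOTES = re.compile(r'\s+and\s+(?=(?:[^"]*"[^"]*")*[^"]*$)')


def split_commands(utterance):
    """Split one utterance into separate commands at each unquoted 'and'."""
    return [part.strip() for part in _AND_OUTSIDE_QUOTES.split(utterance)]


if __name__ == "__main__":
    text = 'switch on the light and send Andrea a text with the content "I like dolphins and whales"'
    for command in split_commands(text):
        print(command)
```

Even this toy version shows why the problem is harder than it looks: a request like "play rock and roll" would be cut in two, so a real system needs to understand the sentence rather than just look for a keyword.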

The revised Assistant is expected to arrive in autumn with the Google Pixel 4.

Google Search is undergoing a major update. If you search for something, you can display a three-dimensional view of it and then place the object in your real surroundings via augmented reality.

A great white shark appears in 3D on the stage. The animation still looks a little wooden and the textures could be better, but the technology is impressive. Off the top of my head, I can't imagine why I'd want a shark in my office, but I'm going to give it a try anyway, because for what feels like ages Google Search has only been given superficial visual refreshes.

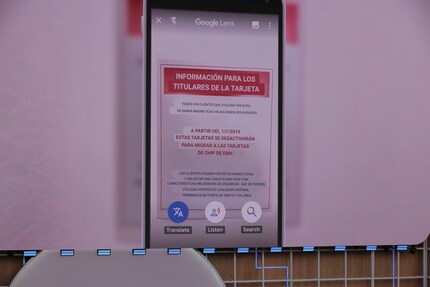

Google Lens, Google's virtual eye with interpretation capabilities, is getting smarter. When you hold a menu in front of your smartphone camera in a restaurant, Lens shows you the most popular dishes, calculates the tip and divides the bill by the number of guests. This is made possible by correlating what the camera sees with data from Google Maps.

This is a nice gadget, but with Google Go, Google also wants to help people around the world who cannot read. If you tap Google Lens in the Google search bar, the voice of your Google Assistant reads text aloud to you. The passages that the app is currently reading are highlighted as well. Translations are of course also possible, both on screen and read aloud.
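The arithmetic behind the tip-and-split feature is simple enough to sketch. The following is an illustration only, not Lens's actual logic: the function name, the rounding rule and the example figures are all assumptions. Decimal is used instead of float so that cent amounts stay exact.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")


def split_bill(total, tip_percent, guests):
    """Return (tip, amount_per_guest), each rounded to whole cents.

    total and tip_percent are passed as strings, e.g. "120.00" and "15".
    """
    if guests < 1:
        raise ValueError("at least one guest is required")
    bill = Decimal(total)
    tip = (bill * Decimal(tip_percent) / 100).quantize(CENT, rounding=ROUND_HALF_UP)
    per_guest = ((bill + tip) / guests).quantize(CENT, rounding=ROUND_HALF_UP)
    return tip, per_guest


if __name__ == "__main__":
    tip, share = split_bill("120.00", "15", 3)
    print("Tip:", tip, "Each guest pays:", share)
```

Because each share is rounded individually, the shares can add up to a cent or so more or less than the total when the bill does not divide evenly.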

It's clear that Google is unifying its apps, harmonising its services and allowing and encouraging data correlation between all the knowledge that the search company has collected over the years. Is that creepy? Yes, it is. Because for the first time, you see what "A little scrap of information here and a little scrap of information there" can do when the data is brought together.

No one can deny that this is technologically impressive. But the applause from the audience makes me a little sick to my stomach. Is this really what we think is good? As in objectively good, and not just technologically impressive?

Apropos, a small side note: Google means "German" and "German language". There is no such thing as a language called "German".

Google Duplex is Google's solution for telephony. In other words, you give your Google Assistant an order, for example: "Hey Google, reserve me a table for three at the Tales Bar in Zurich at half past eight". If Google Duplex worked in Europe, Duplex would call Tales and reserve a table there in a human voice.

This is now also possible in the browser. Duplex understands your travel plans, among other things. It can therefore read your calendar data, display your flight tickets, suggest car hire and the like, and pre-fill a mountain of data. Essentially, all you have to do as a user is click on "Continue" and "Yes".

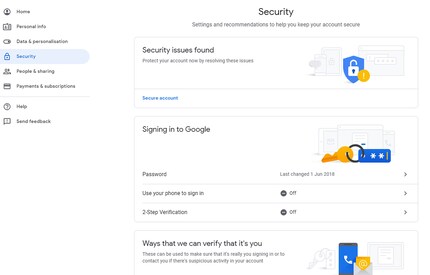

Google is aware that the huge amount of data used to get your personalised stuff can lead to bad things in the wrong hands. That's why Google has adjusted the privacy settings.

You can now set the security settings for the feature you are currently using by clicking on your avatar image. The features, including a separate incognito mode for search, are due to roll out later in the year.

The topic that defines Android Q is security and privacy. It is only briefly mentioned, but nevertheless: a lot is to happen under the bonnet.

Android Q also supports foldable smartphones natively. Remember: with the notch, Google did not follow suit in the Android core. A sign that foldables are the future? Perhaps. Where Google is certain the future lies, however, is 5G. The standard is natively supported by Android Q.

As fun as that sounds, and as cool as all the convenience features being introduced are, something else is more significant. With Federated Learning, Google hopes to open up a whole new world.

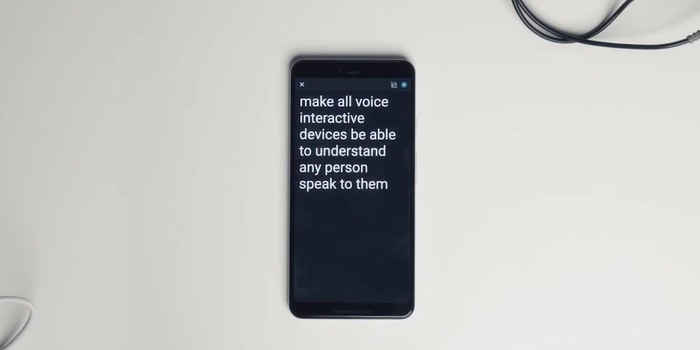

To this end, Google is introducing "Federated Learning". This means that models are trained on users' devices and only the combined improvements, rather than the raw data, are shared. The result is applied to things like predicting the next word in Gboard, Google's smartphone keyboard. The Cloud Speech API transcribes speech to text in real time. Live Caption will even extend this to videos. In other words: you watch a video and the AI automatically generates subtitles for you. This should also be possible with telephone conversations. Imagine what that means if you are deaf, mute or both. Impressive.

Even better: the calls remain on your device and therefore private. Without any data connectivity. The neural network behind the technology runs locally on your phone and is only 80 megabytes in size. It is system-wide, i.e. not app-dependent.
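As for Federated Learning: in the usual sense of the term, each device trains on its own data and only the resulting model updates are combined centrally. The following is a minimal sketch of that combining step, weighted by how much data each device saw. It is a toy illustration under that assumption, not Google's implementation; the function name and the numbers are invented.

```python
def federated_average(client_updates):
    """Combine per-device model weights into one global model.

    client_updates is a list of (weights, num_examples) pairs, where weights
    is a list of floats of equal length on every device. Devices that trained
    on more examples get proportionally more say in the result.
    """
    if not client_updates:
        raise ValueError("need at least one client update")
    total_examples = sum(n for _, n in client_updates)
    dimensions = len(client_updates[0][0])
    return [
        sum(weights[i] * n for weights, n in client_updates) / total_examples
        for i in range(dimensions)
    ]


if __name__ == "__main__":
    # Two hypothetical phones: one saw 1 example, the other 3.
    updates = [([1.0, 2.0], 1), ([3.0, 4.0], 3)]
    print(federated_average(updates))
```

The point of the design is that only the weight lists travel to the server; the typed words or spoken sentences that produced them stay on the phone.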

Google is not stopping there. Sure, there are already recordings of deaf people and those who have had a stroke. But what about those who communicate non-verbally? Google is working on it. And to be honest, I'm in favour of it. Very strongly in fact.

Google is opening up the project. If you know someone who has difficulty articulating and would like to help, you can fill out a form and maybe lend a hand.

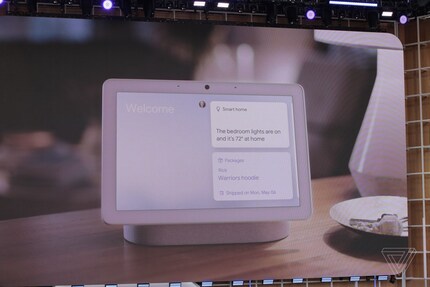

The Google Assistant has a new home. All Home hardware is now called Nest, as the teams of the former start-up Nest and the Google Home teams have been merged. The Google Home Hub is now called Nest Hub. The new Nest Hub Max has a 10-inch display and a camera, and brings together all your devices, from Nest Cams to smart lights and smart locks. Your smartphone already does this in the Google Home app, but dedicated hardware could still offer a lot of bonuses.

Apropos, the Nest Hub Max has a switch on the back that cuts the power supply to the camera and microphone. Finally a security feature that is really good and really necessary.

Journalist. Author. Hacker. A storyteller searching for boundaries, secrets and taboos – putting the world to paper. Not because I can but because I can’t not.