Now it's going digital: History of computing, part 3

by Kevin Hofer

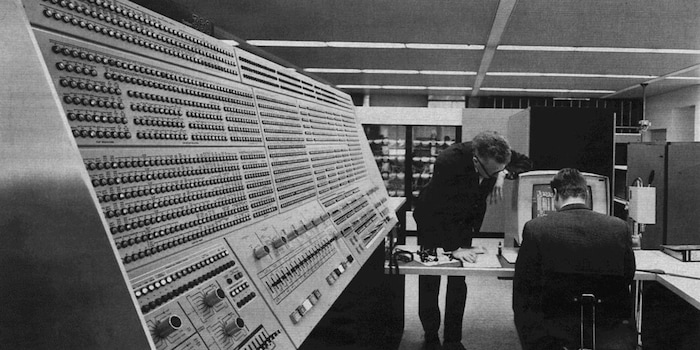

In the early days, digital computers were huge. They not only fuelled fears due to their dimensions, but were also seen as a threat to jobs. One company was central to the acceptance of computers: IBM.

At the beginning of the 1950s, a few companies were fighting over the still very small computer market. At that time, computers were primarily used for scientific purposes. Computer research was influenced by a key paper: "Preliminary Discussion of the Logical Design of an Electronic Computing Instrument", which was written in 1946 under the direction of mathematician John von Neumann.

The paper stated that computers should store both data and programmes in binary code in the same memory. This stored-programme concept is often regarded as one of the most important inventions in the history of computing, because it enables one programme to treat another programme as data.
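A minimal sketch may make the idea concrete. The toy machine below is purely hypothetical: the instruction encoding (opcode times 100 plus address), the names `run` and `disassemble`, and the memory layout are assumptions made for illustration, not a model of any historical computer. It shows instructions and data sharing one memory, and a second routine reading the stored programme as plain numbers.

```python
# Hypothetical toy machine: one memory list holds both instructions and data.
# Assumed encoding for this sketch: cell value = opcode * 100 + address.
LOAD, ADD, STORE, HALT = 1, 2, 3, 0

def run(memory):
    """Execute the programme stored in memory, starting at cell 0."""
    acc = 0  # accumulator
    pc = 0   # programme counter
    while True:
        opcode, addr = divmod(memory[pc], 100)
        pc += 1
        if opcode == LOAD:
            acc = memory[addr]
        elif opcode == ADD:
            acc += memory[addr]
        elif opcode == STORE:
            memory[addr] = acc
        elif opcode == HALT:
            return memory
        else:
            raise ValueError(f"unknown opcode {opcode}")

def disassemble(memory, length):
    """Treat the first `length` cells as data and describe them as text."""
    names = {LOAD: "LOAD", ADD: "ADD", STORE: "STORE", HALT: "HALT"}
    lines = []
    for cell in memory[:length]:
        opcode, addr = divmod(cell, 100)
        lines.append(f"{names[opcode]} {addr}")
    return lines

memory = [
    105,  # cell 0: LOAD  cell 5
    206,  # cell 1: ADD   cell 6
    307,  # cell 2: STORE cell 7
    0,    # cell 3: HALT
    0,    # cell 4: unused
    20,   # cell 5: data
    22,   # cell 6: data
    0,    # cell 7: result
]

run(memory)
print(memory[7])               # 42
print(disassemble(memory, 4))  # ['LOAD 5', 'ADD 6', 'STORE 7', 'HALT 0']
```

Because the programme is just numbers in memory, `disassemble` can process it like any other data. Translators such as interpreters and compilers rely on the same property.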

Most of the computers that were built in the following years were based on this concept. There were a few such models in the early 1950s.

It was not until 1954 that a market for business computers developed. The IBM 650 decimal computer was aimed at universities and companies. At 200,000 dollars, it was relatively inexpensive. That may sound like a lot, but it was not much compared to the scientific computer IBM 701, which cost one million dollars. The company produced over 2000 units of the IBM 650, making it the first mass-produced computer. IBM granted universities that taught computer science a discount of up to 60 per cent. The device therefore established itself primarily at universities.

However, the big breakthrough was a long time coming. One reason for this was that the computers of the time could only be operated by specialists, and their work was expensive. The devices were highly specialised and could only perform one computing task at a time. To save money, any work a scientist could do was left to the scientist.

Computers did not have a good public image. They fuelled fears that they would destroy jobs. This was also reflected in popular culture, for example in the 1957 film "Desk Set", in which the employees of a company fear for their jobs when a computer is introduced.

The computers of the time could not do much more than Charles Babbage's Analytical Engine of the 1830s - but they were a lot faster. They could only handle text to a very limited extent. They could not display lowercase letters, for example. Mainframes were very expensive and found few takers. To make them accessible to more people, specific programmes had to be developed, such as word processing and databases. These types of programmes required programming languages to write them and an operating system to manage them.

Programmes for early computers had to be written in the language of the respective machine. The vocabulary and syntax of machine languages differed significantly from mathematical notation and from natural language. It was obvious that the translation had to be automated. Ada Lovelace and Charles Babbage had already realised this in the 1830s.

To do this, a translation programme written in machine language had to run on the computer. It takes the source programme as data and produces the target programme in machine language. Early higher-level programming languages, however, were translated for the machine by hand, not by the computer. Herman Goldstine did this with the help of flowcharts.

Higher-level programming languages were already being experimented with in the early 1940s. Shortcode was the first such language. William Schmitt implemented it on the UNIVAC - one of the first mainframe computers - in 1950. Shortcode worked in several steps: first, it converted alphabetic input into numeric code, and then it converted this code into machine language. Shortcode was an interpreter. This means that it translated the input of the higher-level programming language statement by statement, one after the other, every time the programme ran. This was very slow.
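The two-step, statement-by-statement approach can be sketched roughly as follows. This is an illustration only: the numeric codes in `CODES`, the tiny `target = a op b` statement format and the function names are assumptions, not the actual Shortcode tables or syntax.

```python
# Hypothetical numeric codes for symbols; NOT the real Shortcode tables.
CODES = {"=": 1, "+": 2, "-": 3, "*": 4}

def encode(statement):
    """Step 1: replace known symbols in the alphabetic input by numeric codes."""
    return [CODES.get(token, token) for token in statement.split()]

def value(token, variables):
    """Look up a variable name, or read the token as a number."""
    return variables[token] if token in variables else float(token)

def execute(encoded, variables):
    """Step 2: carry out one encoded statement of the form  target = a op b."""
    target, assign, left, op, right = encoded
    if assign != CODES["="]:
        raise SyntaxError("expected '=' after the target name")
    a, b = value(left, variables), value(right, variables)
    if op == CODES["+"]:
        variables[target] = a + b
    elif op == CODES["-"]:
        variables[target] = a - b
    elif op == CODES["*"]:
        variables[target] = a * b
    else:
        raise SyntaxError(f"unknown operator {op!r}")

def interpret(source):
    """Translate and execute each statement in turn, afresh on every run."""
    variables = {}
    for statement in source:
        execute(encode(statement), variables)
    return variables

program = ["x = 2 + 3", "y = x * 4"]
result = interpret(program)
print(result)  # {'x': 5.0, 'y': 20.0}
```

Every call to `interpret` repeats the encoding step for every statement, which is one way to see why interpretation of this kind was slow.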

This is where compilers came to the rescue. Input from the higher-level programming language is no longer translated into numerical code first. Instead, the entire programme is translated into machine language once and saved for later use. Although the initial translation takes a long time, the translated programme can be run more quickly at any later point in time.
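As a rough sketch of the difference, the example below translates a whole programme once and then reuses the result. It is a simplification under stated assumptions: the statement format `target = a op b` and the names `compile_program` and `run` are hypothetical, and the "machine language" is merely a list of Python tuples rather than real machine code.

```python
import operator

# Operators the hypothetical mini-language understands.
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}

def compile_program(source):
    """Translate every statement once and return a reusable instruction list."""
    compiled = []
    for statement in source:
        target, assign, left, op, right = statement.split()
        if assign != "=" or op not in OPS:
            raise SyntaxError(f"cannot translate: {statement!r}")
        compiled.append((target, OPS[op], left, right))
    return compiled

def run(compiled, inputs):
    """Execute already translated instructions; no parsing happens here."""
    variables = dict(inputs)
    for target, func, left, right in compiled:
        a = variables[left] if left in variables else float(left)
        b = variables[right] if right in variables else float(right)
        variables[target] = func(a, b)
    return variables

source = ["area = width * height", "total = area + 1"]
compiled = compile_program(source)  # the slow translation step, done once

first = run(compiled, {"width": 3.0, "height": 4.0})
second = run(compiled, {"width": 2.0, "height": 5.0})
print(first["total"], second["total"])  # 13.0 11.0
```

The saved `compiled` list plays the role of the stored machine-language programme: it can be run as often as needed without translating the source again.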

In September 1952, Alick Glennie, a student at the University of Manchester, created the first compiler that was actually implemented. In the following years, various higher-level programming languages were developed with corresponding compilers. Because IBM wanted to gain a foothold in the computer business, the company published Fortran in 1957. The programming language made programming more accessible because it was possible to insert comments into the programmes. These comments were ignored by the compiler, so non-programmers could also read them and understand the programmes.

Cobol, released in 1959, is also worth mentioning. Its syntax was modelled on natural language, which made it easier to understand than Fortran and improved the acceptance of computers. Cobol was designed for business, whereas Fortran was intended for scientists.

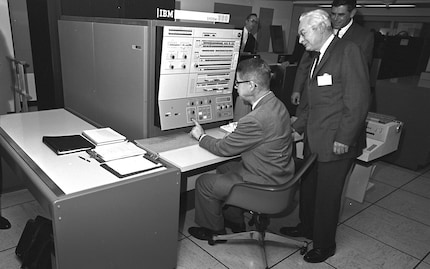

For computers to actually become useful beyond the sciences, however, a control programme was needed in addition to the higher-level programming languages. Today we call this the operating system: a system that orchestrates the other applications, organises the file storage and manages the peripherals. Once again, it was IBM that recognised the need for an operating system. With the IBM 360 operating system, the company would dominate the computer market for years to come.

The company entered the mainframe computer business in the mid-1950s with the IBM 650. The invention of the transistor led IBM to switch gradually from vacuum tubes to semiconductors. These first transistor computers heralded the second generation of computers.

IBM had several specialised computer series at this time: for science and engineering, for data processing, for accounting, and supercomputers. In the early 1960s, the managers at IBM decided to put all their eggs in one basket and combine all these applications in one architecture. For an estimated 5 billion dollars, the company developed System/360.

IBM System/360 was more an architecture than a single machine. Central to the architecture was the operating system, which ran on all 360 models and was available in three variants: for installations without hard drives, for smaller installations with hard drives and for larger installations with hard drives. The first 360 models from 1965 were hybrids made of transistors and integrated circuits. Today, these are referred to as the third generation of computers.

The 360 operating system led to a shift: computers were now valued according to their operating system and not their hardware. The financial risk involved in development paid off for IBM. Until the 1970s, the company from Armonk, New York, was the undisputed market leader.
